
What is Confirmation Testing?

Confirmation testing is a software testing technique in which the software under test is put through a set of previously run tests to make sure the results are consistent and accurate. The intent is to check that all previously found bugs have truly been eliminated from the software components, and to catch any that remain.

Basically, the tests run earlier are run once again after the devs have fixed the bugs found in the first run. This testing is also called re-testing because it literally means running the same test twice: once before the bug is fixed and once after.

Generally, when testers find a bug, they report it to the dev team that wrote the code. After looking into the issue, the devs fix it and push another version of the feature. Once QAs receive this scrubbed version of the software, they run tests to check that the reported bug is, indeed, gone.

Note: While running these tests, QAs need to follow the defect report they created earlier to inform developers of the bugs found in the software at that stage. QAs must run the same tests and check whether any of the previous (or even new) functional anomalies show up.

What is the Purpose of Confirmation Testing?

As you now know, confirmation testing is a vital checkpoint in software development. It checks that issues found during earlier testing are fixed before the software goes live.

Here’s how it works:

- Focus on fixes: Testers re-run the specific tests that previously failed due to bugs. This verifies developers have addressed the reported problems.

- Improved quality: By catching lingering bugs, confirmation testing enhances the overall quality and reliability of the software. This translates to fewer crashes and a smoother user experience.

- Reduced costs: Fixing bugs early in the development lifecycle is cheaper than after release. Confirmation testing helps identify and eliminate issues before they cause problems for real users, minimizing post-release maintenance needs.

- Increased user satisfaction: Software that works as intended leads to happy users. Confirmation testing helps guarantee a positive user experience by ensuring the software functions correctly under various conditions.

What is a Confirmatory Test Example?

Let’s say a compatibility test shows that the software-under-test does not render well on the new iPhone. The bug is reported to the devs, and they eventually send back the newer version of the software/feature after fixing the bug.

Of course, you believe the devs. But you also run the SAME compatibility test once again to check if the same bug is actually eliminated permanently.

In this case, the compatibility test being run twice is a confirmation test.
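The scenario can be sketched in a few lines of Python. Everything here is a hypothetical stand-in: the two `layout_width_*` functions represent the build before and after the fix, and the 393-pixel viewport is an assumed value, not a figure from any real device specification. The point is only that the identical check runs against both builds.

```python
# Hypothetical stand-ins for two builds of the same feature.
def layout_width_v1(viewport_px: int) -> int:
    """Build with the bug: a fixed width that overflows narrow screens."""
    return 480


def layout_width_v2(viewport_px: int) -> int:
    """Build with the fix: the layout is capped at the viewport width."""
    return min(480, viewport_px)


def compatibility_test(layout_width, viewport_px: int = 393) -> bool:
    """The same check is used before and after the fix, unchanged."""
    return layout_width(viewport_px) <= viewport_px


# First run: the test exposes the bug in the original build.
first_run = compatibility_test(layout_width_v1)
# Confirmation run: the same test, executed against the fixed build.
confirmation_run = compatibility_test(layout_width_v2)

print(f"first run passed: {first_run}")
print(f"confirmation run passed: {confirmation_run}")
```

Because the test itself is not modified between runs, a pass on the second run can be attributed to the fix rather than to a change in the check.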

When to Do Confirmation Testing?

- Right after a bug fix: Once a bug has been reported and fixed, these tests should be run to check that the bug has actually been resolved.

- Right before regression tests: Regression tests verify that code changes have not affected existing software functionality. For regression to go smoothly, confirmation tests should be run beforehand. That way, the regression tests won’t be disrupted by bugs that are still present.

- Right after bugs have been rejected: If a bug has been reported and is then rejected by the dev team, these tests must be run to reproduce the bug and report it again in greater detail.

Confirmation Testing Techniques

Confirmation testing doesn’t involve specific techniques because it essentially re-runs previous tests. However, there are key aspects to consider during the process:

Planning and Preparation:

- Test Case Selection: Identify the specific test cases that exposed the bugs reported earlier.

- Test Data: Ensure you have the same or similar test data that initially triggered the bugs.

- Defect Tracking System: Reference the bug reports to understand the exact issue and its reported behavior.

Execution:

- Re-run Tests: Execute the identified test cases again on the fixed version of the software.

- Observe and Document: Carefully observe the system’s behavior and document the results.

- Expected Outcome: The previously failing test cases should now pass, indicating the bugs are fixed.

Analysis and Reporting:

- Bug Verification: If the bug persists, the issue isn’t truly resolved. Report it with detailed information for further investigation.

- New Bugs: If new bugs emerge during confirmation testing, report them for proper handling.

- Confirmation Report: Document the confirmation testing process, including re-tested features, results, and any newly discovered issues.
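The planning, execution, and reporting steps above could be wired together roughly as follows. This is a sketch under stated assumptions, not a prescribed implementation: the `Defect` record, the `BUG-…` identifiers, and the three sample tests are all invented for illustration, and a test is modelled as a plain function that returns `True` on a pass.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Defect:
    defect_id: str   # ID in the defect tracker (hypothetical format)
    test_case: str   # name of the test case that exposed the bug


def run_confirmation(
    defects: List[Defect],
    tests: Dict[str, Callable[[], bool]],
) -> Dict[str, List[str]]:
    """Re-run only the tests tied to reported defects and sort the outcomes."""
    report: Dict[str, List[str]] = {"fixed": [], "persists": [], "errors": []}
    for defect in defects:
        test = tests[defect.test_case]
        try:
            passed = test()
        except Exception:
            # The test crashed instead of passing or failing: possibly a new issue.
            report["errors"].append(defect.defect_id)
            continue
        report["fixed" if passed else "persists"].append(defect.defect_id)
    return report


def crashing_test() -> bool:
    raise RuntimeError("export service unavailable")


# Hypothetical test cases and the defects they originally exposed.
tests = {
    "test_login": lambda: True,            # now passes
    "test_checkout_total": lambda: False,  # still fails
    "test_export": crashing_test,          # raises an unexpected error
}
defects = [
    Defect("BUG-101", "test_login"),
    Defect("BUG-102", "test_checkout_total"),
    Defect("BUG-103", "test_export"),
]

report = run_confirmation(defects, tests)
print(report)
```

The resulting dictionary is a rudimentary confirmation report: defects listed under `persists` go back to the devs with more detail, and anything under `errors` is a candidate for a new bug report.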

Reasons Confirmation Testing Differs from All Other Testing Types

Confirmation testing stands out from all other testing types due to several key reasons:

- Focus on Specific Fixes: This testing is designed to validate that a specific reported defect in a requirement or feature has been fixed correctly, rather than testing the overall functionality of a system.

- Narrow Scope: Unlike other testing types that may cover a wide range of scenarios, confirmation testing is focused on verifying specific criteria and ensuring they meet the desired specifications.

- Limited Resources: This testing is often conducted with limited time and resources. It is typically performed towards the end of the development cycle to confirm that all requirements have been met.

- Risk-Based Approach: This testing prioritizes high-risk areas or critical functionalities, promptly identifying and addressing potential issues.

Features of Confirmation Testing

- It requires planning and effort from the development and the testing team.

- It is not necessary to create new test cases, as the same ones that led to finding bugs will be run again after debugging.

- It is often performed before regression testing, which then checks that existing modules have not been affected by the bug fix.

- It aims to increase the software success rate by making sure that only software with verified fixes moves through the funnel.

- It confirms whether the bug(s) still exist in any shape or form after the debugging exercise.

Advantages of Confirmation Testing

- Confirmation tests help an application work as reliably as possible. These tests literally double-check whether any existing bugs remain or whether new bugs have shown up after debugging. With the right confirmation tests in place, reported bugs are far less likely to get through to deployment.

- These tests help make sure that reported bugs are gone and that the software's functionality is not disrupted in the hands of users.

- Confirmation tests are easy to execute because you literally run the same tests twice. There is no need to create specific test cases or scripts.

- These tests are often executed right before regression tests. This is done to ensure that specific modules are bug-free before the regression test checks for any disruptions to the larger system.

- It also helps with the earlier detection of major or minor bugs.

Disadvantages of Confirmation Testing

- Confirmation tests are usually run manually, since uncertainties around defect resolution make them hard to automate. Manual testing inevitably stretches timelines and can delay product releases.

- Generally, it is a best practice to run regression tests after confirmation tests to check the application’s overall viability. But running regression tests takes a fair amount of time, often overnight.

Challenges in Confirmation Testing

- These tests are change-oriented. For every confirmation test, testers have to run regression tests, which is a real sink of time. As mentioned above, this can lengthen time-to-market, which is never a good thing.

- Confirmation tests usually check if pre-existing bugs are still plaguing the software. They hardly ever find new bugs. Identifying new bugs requires a separate set of tests, which pushes more workload onto testers, and the devs then have to fix those new bugs. This is quite a gap in the scope of testing.

- Once again, as mentioned above, confirmation tests can usually only be manually executed. Every time a bug is fixed, these tests have to verify that it really is gone. Given that most modern software is fairly complex and layered, multiple bugs will show up at any given time. Imagine running confirmation tests for all those bugs, and you’ll see the problem.

How to Perform Confirmation Testing with Testsigma?

Testsigma fits easily into confirmation testing workflows. The step-by-step process is described here, along with related screenshots.

Identify Test Cases:

Pinpoint the tests that exposed bugs in your earlier test runs or previous Testsigma runs.

Prepare Test Data:

Ensure you have the same or similar data that triggered the bugs initially.

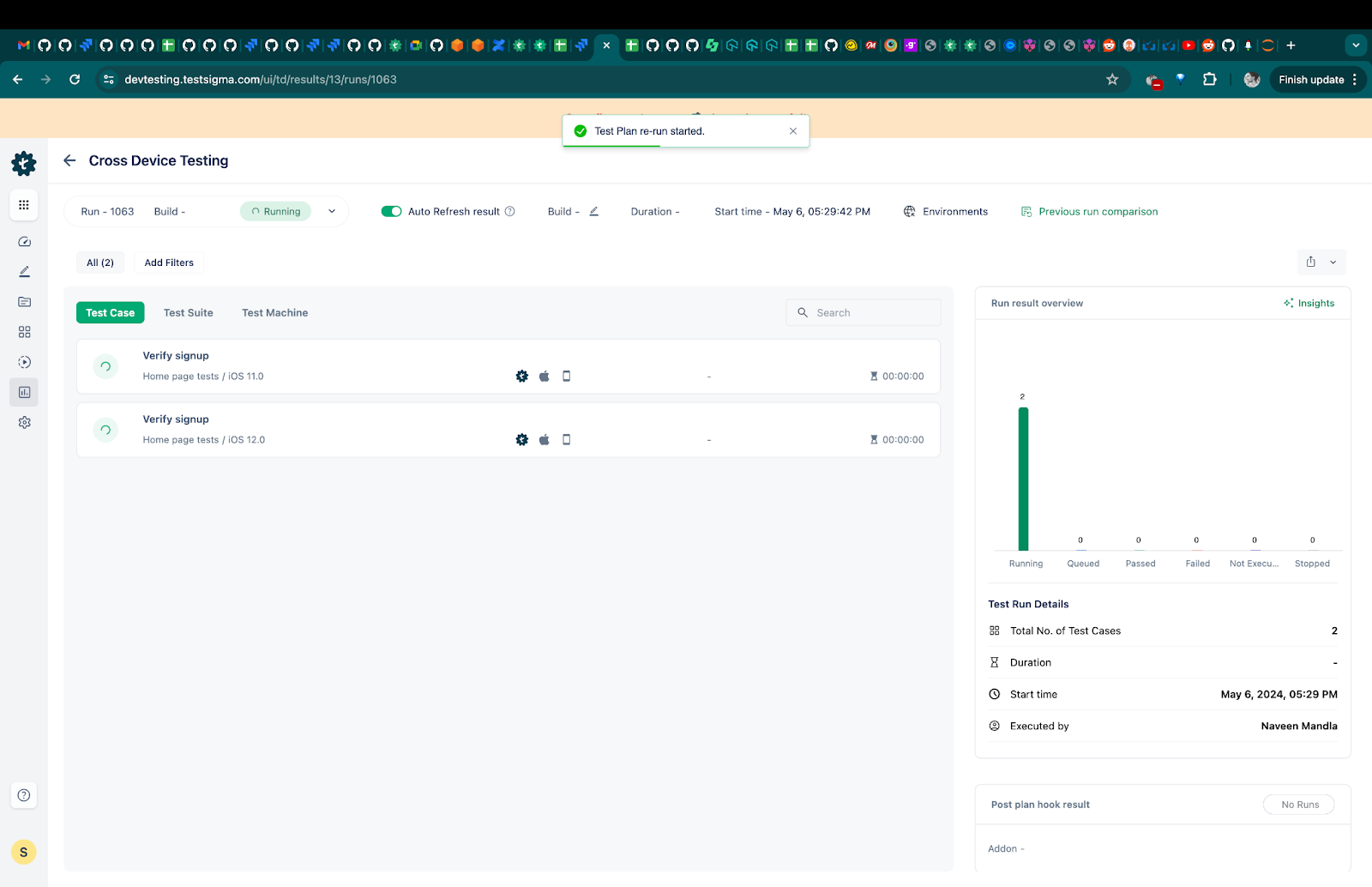

Run Existing Test Plan:

Instead of creating a new test plan specifically for confirmation, simply re-run the existing test plan that contains the identified test cases. Testsigma allows re-running plans or specific test suites within a plan.

Here is a screenshot of the same plan being rerun.

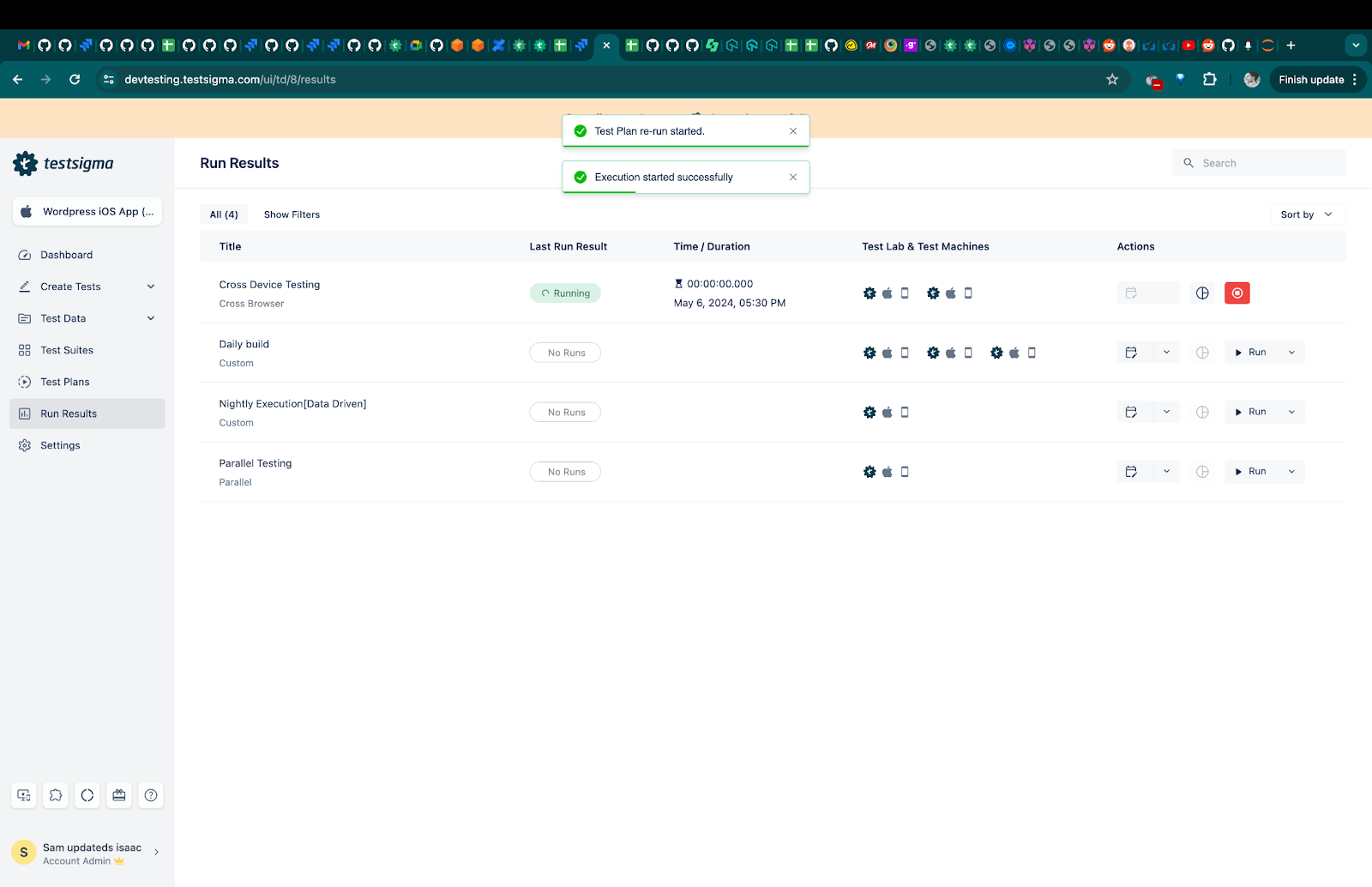

Analyze Results:

Pay close attention to the results of the previously failing tests. Look for any signs of the bugs persisting. Testsigma’s reports will highlight failures.

Report Bugs:

If a previously failing test still fails, the bug might not be fixed. Report it with details for further investigation.

You can see a successful Test Run in the screenshot below.

Report New Issues:

If new bugs emerge during confirmation testing, report them using the same process.

How to Run Confirmation Testing?

Unlike most other software tests, confirmation testing doesn’t have any specific techniques. You literally just run the same tests twice. As soon as a bug has been resolved, put the software module through the same tests that led to the discovery of the bug in the first place.

If neither the same bug nor any new ones emerge and all confirmation tests pass, you’re done. If not, testers must re-examine the emerging bugs, reproduce them, and provide deeper, more detailed reports to devs. Reappearing bugs can indicate deeper flaws in the underlying system.

Note: If you need to execute these tests multiple times in the future then they become a good candidate for test automation.
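When such tests are automated, re-running only the previously failing ones is straightforward. The sketch below uses Python's standard `unittest` module; the `apply_discount` function, the `DiscountTests` class, and the `PREVIOUSLY_FAILED` list are hypothetical examples, and in practice the list of names would come from your defect report or test tool.

```python
import io
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test, after the bug fix."""
    return round(price * (1 - percent / 100), 2)


class DiscountTests(unittest.TestCase):
    def test_ten_percent(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_zero_percent(self):
        self.assertEqual(apply_discount(50.0, 0), 50.0)

    def test_full_discount(self):
        self.assertEqual(apply_discount(80.0, 100), 0.0)


# Names of the tests that failed before the fix (assumed to come from the defect report).
PREVIOUSLY_FAILED = ["test_ten_percent", "test_full_discount"]


def rerun_failed(names):
    """Build a suite from the named tests only, run it, and return the result object."""
    suite = unittest.TestSuite(DiscountTests(name) for name in names)
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)
    return runner.run(suite)


result = rerun_failed(PREVIOUSLY_FAILED)
print(f"ran {result.testsRun}, all passed: {result.wasSuccessful()}")
```

Teams using pytest can get similar behavior from its `--last-failed` option, which re-runs only the tests that failed in the previous run.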

What to Do After Confirmation Testing?

Once confirmation tests have confirmed that no live bugs exist in the application, the software can be moved further along the development pipeline. You simply push it to the next stage of testing/deployment.

The whole point of this testing is to ensure the accuracy of bug elimination, thus making the software more reliable and worthy of customers’ positive attention.

However, often, confirmation tests are immediately followed by regression tests. Since one or more bug fixes have been implemented on the software, the regression tests check that these changes haven’t negatively impacted any of the software functions that were working perfectly well before debugging took place.
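One way to express this ordering is a small gate that runs the confirmation tests first and starts the full regression suite only when they pass. The following is a minimal sketch with invented test names; `release_gate` and `failing` are hypothetical helpers, and each test is again modelled as a function returning `True` on a pass.

```python
from typing import Callable, Dict, Iterable, List


def failing(tests: Dict[str, Callable[[], bool]], names: Iterable[str]) -> List[str]:
    """Return the names of the tests that do not pass."""
    return [name for name in names if not tests[name]()]


def release_gate(tests: Dict[str, Callable[[], bool]], confirmation_names: List[str]) -> dict:
    """Run confirmation tests first; run the full suite only if they all pass."""
    still_broken = failing(tests, confirmation_names)
    if still_broken:
        # The fix did not work: stop here and send the bug back to the devs.
        return {"stage": "confirmation", "failed": still_broken}
    regressions = failing(tests, tests.keys())
    if regressions:
        # The fix worked but broke something that used to pass.
        return {"stage": "regression", "failed": regressions}
    return {"stage": "done", "failed": []}


suite = {
    "test_login": lambda: True,
    "test_search": lambda: True,
    "test_cart_total": lambda: True,     # previously failing, now fixed
    "test_profile_page": lambda: False,  # broken by the fix: a regression
}

outcome = release_gate(suite, ["test_cart_total"])
print(outcome)
```

Stopping at the confirmation stage avoids spending a long regression run on a build whose reported bug is still present.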

Conclusion

Confirmation tests are a necessary (if somewhat inconvenient) fail-safe that testing cycles need to push out truly bug-free products. The challenge lies in designing and scheduling these tests without stretching the timelines to unacceptable levels. However, the efficacy of these tests is beyond question, and they absolutely deserve a place of pride in your test suites.

Frequently Asked Questions

Are confirmation testing and regression testing the same?

No. Confirmation testing checks that previously identified bugs are actually eliminated after debugging. Regression tests check whether the rest of the software system has been negatively affected by code changes.

What is the difference between confirmation testing and retesting?